Ron Sielinkski

Dec 3, 2025

Introduction

Over the past year, a new generation of answer engines (SearchGPT, Perplexity, Claude.ai, and others) has begun reshaping how people discover information.

These systems don’t just find answers. They compose them, citing sources directly in the response.

For businesses that depend on organic visibility, this shift creates an urgent question:

How do you optimize for an engine that does not behave like Google?

Our recent analysis provides a clear, and surprising, answer: Every major answer engine builds its own retrieval index, and those retrieval systems disagree with each other almost all the time. The key takeaway: the tactics that work for Google often don’t work for Perplexity, and neither reliably predicts what SearchGPT will cite.

Let’s dig into the data.

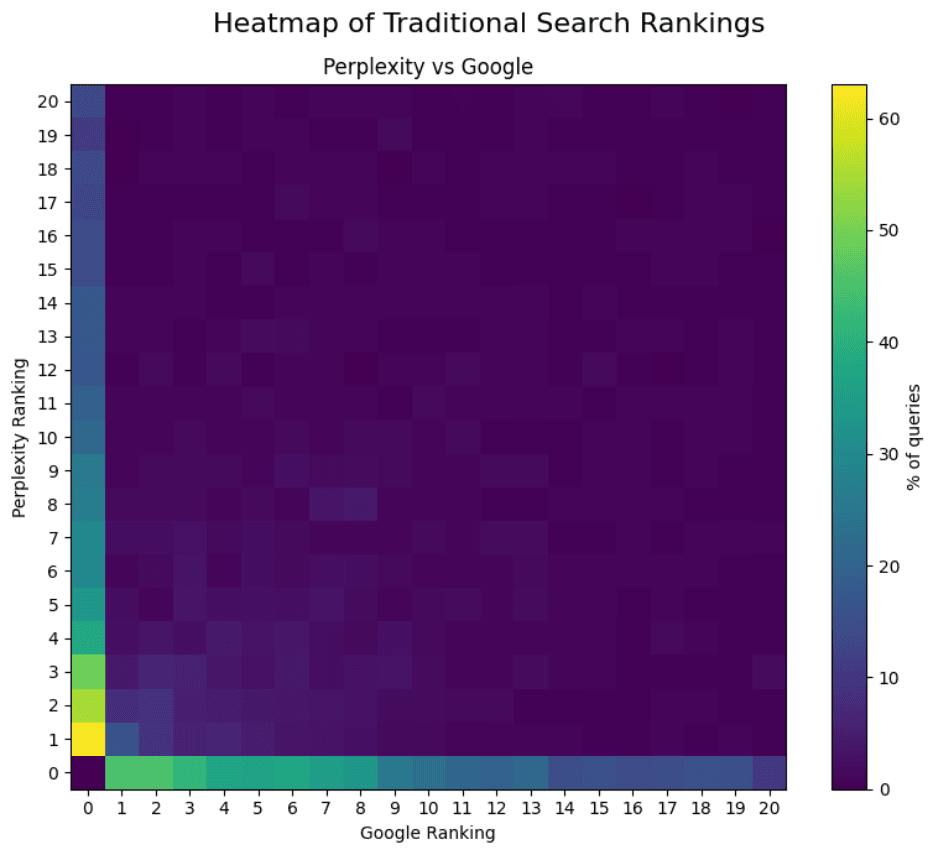

The Top-20 Results for Google and Perplexity Disagree 87% of the Time

Perplexity recently announced a new Search API (“Introducing the Perplexity Search API”). It gives developers access to the search index that powers Perplexity’s answer engine. Submit a query, and you get ranked web results like those of a traditional search engine.

We compared the top-20 traditional search results from Google and Perplexity’s new Search API for nearly 1,000 queries.

Here’s what we found: Only 13% of results overlap in any ranking position.

In other words, 87% of the URLs that Perplexity ranks in its top-20 never appear in Google’s top-20, and vice versa.
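To make the overlap figure concrete, here is a minimal Python sketch of one way such a number could be computed. The article does not state its exact formula or how URLs were normalized, so the function names, the toy data, and the definition used here (share of one engine's top-k URLs that appear anywhere in the other's top-k, averaged over queries) are all assumptions for illustration.

```python
def top_k_overlap(results_a, results_b, k=20):
    """Share of A's top-k URLs that also appear anywhere in B's top-k."""
    top_a = results_a[:k]
    top_b = set(results_b[:k])
    if not top_a:
        return 0.0
    shared = sum(1 for url in top_a if url in top_b)
    return shared / len(top_a)


def mean_overlap(queries, k=20):
    """Average per-query overlap; `queries` maps query -> (list_a, list_b)."""
    scores = [top_k_overlap(a, b, k) for a, b in queries.values()]
    return sum(scores) / len(scores) if scores else 0.0


# Hypothetical toy data (not from the study): two queries, five results each.
toy = {
    "best crm": (["a.com", "b.com", "c.com", "d.com", "e.com"],
                 ["x.com", "a.com", "y.com", "z.com", "w.com"]),
    "crm pricing": (["f.com", "g.com", "h.com", "i.com", "j.com"],
                    ["k.com", "l.com", "m.com", "n.com", "o.com"]),
}
print(f"{mean_overlap(toy, k=5):.0%}")  # prints 10%
```

In this toy case one URL out of five is shared for the first query and none for the second, so the average overlap is 10%. A real comparison would also need a URL normalization step (trailing slashes, tracking parameters), which is left out here.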

A heatmap illustrates the difference:

Traditional Search Rankings vs Generative Search

The diagonal (where identical rankings would appear) is nearly empty.

The big purple void shows that the two engines almost never assign the same rank to URLs.

Row 0 and column 0 (the marginal totals) show the percentage of URLs from one engine that appear anywhere in the other engine’s top-20. Outside the top-ranked URLs (in yellow and green), the two engines almost always select different URLs.
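The grid behind a heatmap like this could be assembled roughly as follows. This is a sketch under stated assumptions, not the code used for the figure: the function name, the choice of which engine goes on which axis, and the toy inputs are hypothetical. Cells with both indices at 1 or above hold rank-to-rank agreement, while row 0 and column 0 hold the marginal "appears anywhere in the other top-k" percentages.

```python
def rank_agreement_matrix(queries, k=20):
    """Build a (k+1) x (k+1) grid of percentages over all queries.

    Cell [i][j] (i, j >= 1): % of queries where the URL at rank i on engine A
    sits at rank j on engine B.
    Cell [i][0]: % of queries where A's rank-i URL appears anywhere in B's top-k.
    Cell [0][j]: % of queries where B's rank-j URL appears anywhere in A's top-k.
    """
    counts = [[0] * (k + 1) for _ in range(k + 1)]
    for list_a, list_b in queries:
        # Map each URL in B's top-k to its 1-based rank.
        rank_in_b = {url: j for j, url in enumerate(list_b[:k], start=1)}
        for i, url in enumerate(list_a[:k], start=1):
            j = rank_in_b.get(url)
            if j is not None:
                counts[i][j] += 1  # same URL at rank i (A) and rank j (B)
                counts[i][0] += 1  # marginal for A's rank i
                counts[0][j] += 1  # marginal for B's rank j
    n = len(queries)
    return [[100.0 * c / n if n else 0.0 for c in row] for row in counts]


# Hypothetical toy data: (engine A results, engine B results) per query.
toy_queries = [
    (["a", "b", "c"], ["a", "c", "x"]),
    (["a", "d", "e"], ["d", "a", "y"]),
]
matrix = rank_agreement_matrix(toy_queries, k=3)
print(matrix[1][1])  # % of queries where both engines put the same URL at #1
```

A near-empty diagonal in this grid corresponds to the two engines rarely agreeing on the rank of a URL, and low marginals correspond to the engines rarely sharing URLs at all.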

This isn’t a minor difference: It’s a different worldview. If you optimize for Google only, you will be invisible on Perplexity nearly 90% of the time.

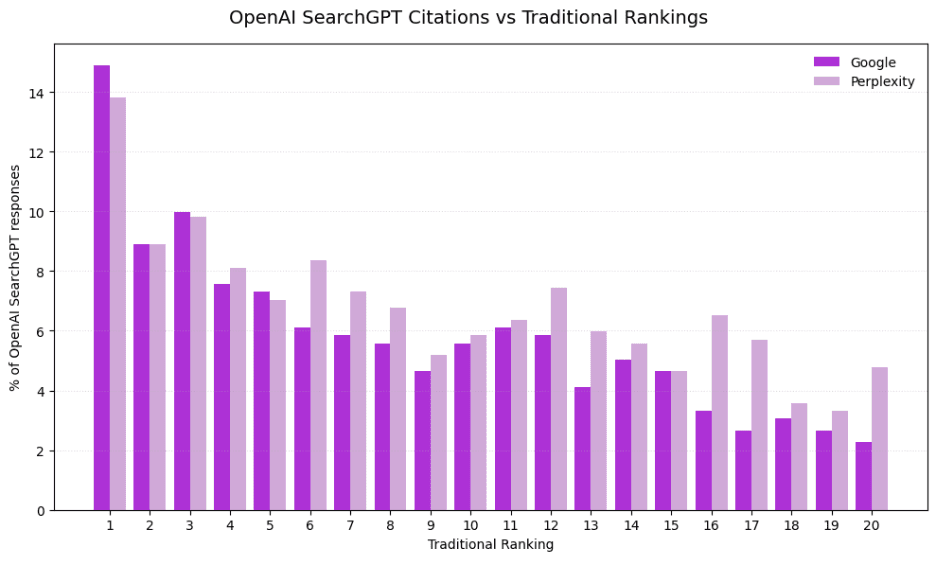

SearchGPT Cites High-Ranking Traditional Pages from Both Engines

Next, we tested how often OpenAI’s SearchGPT cites the URLs that appear in the top-20 results of either Google or Perplexity.

Key finding:

Google #1 results are cited ~15% of the time.

Perplexity #1 results are cited ~14% of the time.

Citation rates drop off at lower positions, but with occasional spikes (because generative engines value content quality, not just rank position).
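A citation-rate-by-position curve like the one summarized above could be tabulated along these lines. This is a minimal sketch: the function name and input shape (one pair of ranked URLs and cited URLs per query) are assumptions, and the toy data is invented purely to show the mechanics.

```python
def citation_rate_by_rank(queries, k=20):
    """For each rank 1..k, the share of queries whose URL at that rank was cited.

    `queries` is a list of (ranked_urls, cited_urls) pairs, one per query.
    Returns a list of k fractions; index 0 corresponds to rank #1.
    """
    cited_counts = [0] * k
    seen_counts = [0] * k
    for ranked_urls, cited_urls in queries:
        cited = set(cited_urls)
        for pos, url in enumerate(ranked_urls[:k]):
            seen_counts[pos] += 1  # a result existed at this rank
            if url in cited:
                cited_counts[pos] += 1  # and the answer engine cited it
    return [c / s if s else 0.0 for c, s in zip(cited_counts, seen_counts)]


# Hypothetical toy data: search results and answer-engine citations per query.
toy_queries = [
    (["a", "b", "c"], ["a", "z"]),
    (["d", "e", "f"], ["f"]),
]
rates = citation_rate_by_rank(toy_queries, k=3)
print(rates)  # prints [0.5, 0.0, 0.5]
```

In the toy output, rank #3 is cited as often as rank #1, which is the kind of lower-position spike described above.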

So, yes, traditional rankings offer some predictive signals. But here’s the twist…

To Earn Both Signals, You Must Be the Exception

Since Google and Perplexity disagree 87% of the time, a natural implication follows:

The only way to earn the cumulative effect—ranking well on both engines and therefore increasing your odds of being cited by SearchGPT—is to be one of the rare sites that appear in both top-20 lists.

Most brands simply don’t.

This is why some websites appear often in SearchGPT responses despite mediocre Google rankings, while others dominate Google but are absent from generative answers.

Perplexity and SearchGPT Also Disagree With Each Other

If Google and Perplexity disagree 87% of the time, maybe SearchGPT and Perplexity’s answer engine are more aligned?

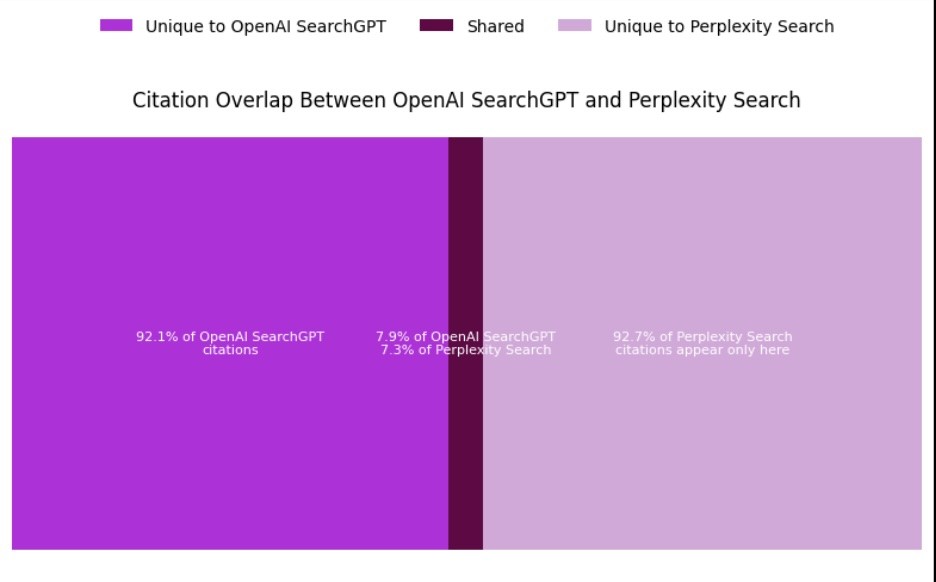

We tested that, too. On a per-query basis, SearchGPT’s citations and Perplexity’s citations overlap only 7-8% of the time.

That means:

Perplexity chooses one set of URLs to cite.

SearchGPT chooses a different set.

Over 90% of the URLs that the generative engines cite are unique to each platform.
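The two numbers above measure slightly different things, and a short sketch may help separate them. The article does not say which overlap formula it used, so the Jaccard index below is an assumed stand-in for "per-query overlap", and `unique_share` is a hypothetical helper for the "unique to each platform" figure.

```python
def jaccard(cited_a, cited_b):
    """Shared citations divided by all distinct citations from both engines."""
    a, b = set(cited_a), set(cited_b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def unique_share(cited_a, cited_b):
    """Fraction of A's citations that B did not cite."""
    a, b = set(cited_a), set(cited_b)
    return len(a - b) / len(a) if a else 0.0


# Hypothetical citations for a single query.
engine_a = ["u1", "u2", "u3"]
engine_b = ["u3", "u4"]

print(jaccard(engine_a, engine_b))       # prints 0.25
print(unique_share(engine_b, engine_a))  # prints 0.5
```

Averaging these per-query values over the full query set would give platform-level figures comparable in spirit to the ones reported here, assuming the same definitions.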

The result, again, is a different worldview.

Every answer engine uses its own retrieval index, its own ranking logic, its own filtering heuristics, and its own model-driven content evaluation.

There is no “standard” search result feeding all these models.

The New Reality: Multi-Engine Visibility Is the Key to Winning AI Search

Here’s the strategic takeaway.

Old SEO Mindset: “Optimize for Google and everything else will follow.”

New GSO (Generative Search Optimization) Reality: Optimize for Google, Perplexity, Bing, and other answer engines separately. They do not agree, and they do not share the same retrieval mechanisms.

Every generative engine:

Crawls differently

Indexes differently

Ranks differently

Filters differently

Cites differently

If your brand wants to consistently appear in generative answers:

You must be visible across multiple retrieval ecosystems, not just Google.

You must understand how each engine evaluates authority.

You must track not only rankings but citations and co-citations.

You must optimize content structure, schema, clarity, and relevance—not just backlink signals.

This is the heart of Generative Search Optimization (GSO): It’s how the new search landscape works.

FAQs

How much do Google and Perplexity search results actually differ?

Our analysis of nearly 1,000 queries found that Google and Perplexity disagree on 87% of their top-20 results. Only 13% of URLs that Perplexity ranks ever appear in Google’s top-20, and vice versa. This represents fundamentally different “worldviews” about what content deserves to rank.

Does ranking well on Google help you get cited by AI answer engines like SearchGPT?

There’s some correlation, but it’s weaker than you might expect. Google’s #1 ranked results are cited by SearchGPT only about 15% of the time, while Perplexity’s #1 results are cited roughly 14% of the time. Generative engines value content quality over rank position, which explains occasional spikes at lower positions.

How often do SearchGPT and Perplexity cite the same sources?

Remarkably rarely. Our research shows SearchGPT and Perplexity citations overlap only 7-8% of the time on a per-query basis. Over 90% of the URLs that each generative engine cites are unique to that platform.

Why do answer engines disagree so much with each other?

Every answer engine uses its own independent retrieval index, ranking logic, filtering heuristics, and model-driven content evaluation. There is no “standard” search result feeding all these models—they each crawl, index, rank, filter, and cite differently.

What is Generative Search Optimization (GSO)?

GSO is the new approach to visibility that acknowledges traditional SEO alone is insufficient. It involves optimizing for Google, Perplexity, Bing, and other answer engines separately, since they don’t share the same retrieval mechanisms. GSO focuses on content structure, schema, clarity, relevance, and multi-engine visibility—not just backlink signals.

What should brands do to improve their AI search visibility?

Brands need to: (1) Achieve visibility across multiple retrieval ecosystems, not just Google; (2) Understand how each engine evaluates authority; (3) Track citations and co-citations, not just rankings; and (4) Optimize content structure, schema, clarity, and relevance beyond traditional backlink strategies.